by Kevin Klapak

This article explains the benefits of using Oracle's virtualization technology and walks through how to convert your current Oracle Solaris system into a non-global zone.


Introduction

Upgrading your infrastructure, whether on premises to new hardware, off premises to the cloud, or a hybrid of both, is also a great time to look at your current system architecture and at how you can get the most out of the physical resources that you currently have. The context of this article is Oracle's SPARC systems; however, the examples given apply whether your Oracle Solaris system is SPARC- or x86-based.

Oracle's Virtualization Technology

Oracle offers two complementary virtualization technologies: Oracle VM Server for SPARC (previously called Logical Domains or LDoms) and Oracle Solaris Zones. Oracle VM Server for SPARC is the hardware virtualization technology that provides the ability to carve up a server into LDoms running on a hypervisor that runs in the firmware. Oracle Solaris Zones technology, on the other hand, is a lightweight operating system virtualization technology provided by Oracle Solaris, and it can be used on both SPARC and x86 systems to separate applications in the user space.

Every SPARC T-Series or newer SPARC server from Oracle uses LDoms. Even if you don't partition your box into logical domains, you are still running one single large LDom, called the primary or control domain, encapsulating the complete server.

Every installation of Oracle Solaris, starting from Oracle Solaris 10, has one zone called the global zone where the shared kernel runs. Non-global zones are additional zones, which act as containers separating applications in the user space.

Why Would You Want to Use Oracle Solaris Zones?

Oracle Solaris Zones offer a wide range of benefits, such as the following:

- Flexibility. It is very simple to attach and detach zones from one global zone to another global zone on another machine.

- Security. If a non-global zone is ever compromised, an attacker is unable to affect other non-global zones or the global zone.

- Adherence to the container principle. You can separate your applications from each other and maintain a separate set of Oracle Solaris packages and other dedicated resources.

- A clean architecture. The global zone manages the resources between the zones, runs the kernel, does the scheduling, runs the cluster, manages the devices, and so on. The non-global zones run the applications.

Why Would You Want to Use LDoms on SPARC Servers?

LDoms also provide a wide set of benefits, such as the following:

- Ability to manage and isolate independent kernels. You might want to run different versions of Oracle Solaris 10 within a box. Or, you might want to run Solaris 10 and Solaris 11 right next to each other within a box.

- Maximized security and isolation. Completely separate operating systems and hardware resources provide a greater level of isolation than would be the case for Oracle Solaris Zones.

- Mixed access to devices. The LDoms software approach offers some flexibility in how devices can be accessed, including virtual access and direct access.

- Reduced licensing requirements. You might need to reduce the number of virtual CPUs in a box for licensing reasons. LDoms are recognized as hard partitions by Oracle for license boundaries.

- Increased fault tolerance. You might not want your I/O to depend on a single service domain. You can build multipath groups of devices across two service domains that each provide I/O devices.

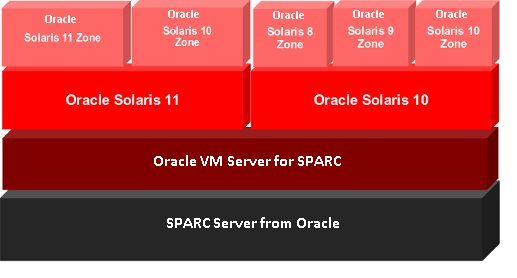

As you can see, these two technologies help fulfill, for the most part, two different set of needs and, therefore, complement each other well. The diagram below shows an example of a SPARC architecture running one Oracle Solaris 11 LDom and one Oracle Solaris 10 LDom with branded non-global zones on top, thus gaining all of the described benefits discussed in this article above.

Figure 1. SPARC architecture running one Oracle Solaris LDoms with branded non-global zones on top

The following example shows how you can convert your current Oracle Solaris system into a non-global zone to gain the benefits described above. Whether you are running an x86 Oracle Solaris global zone or a global zone within a SPARC LDom, these steps will work the same on both platforms.

How to Convert Your Oracle Solaris System into a Non-Global Zone

The following sections show the steps for migrating your system to a non-global zone.

Scan the Source System With zonep2vchk (Oracle Solaris 10 and Later Only)

The zonep2vchk tool, available only on Oracle Solaris 10 and later versions, helps identify any possible incompatibilities with moving your physical system into a non-global zone. The output of each of the following commands can also be redirected to a file (for example, zonep2vchk -b 11 > /net/nfs_server/p2v/output_command1.txt) to help diagnose any problems that might arise after the migration.

The command in Step 4 of this section shows how to output the zone configuration file to an NFS share of your choosing. This file will be used later when you create the Oracle Solaris Zone on the target machine. For more information, see the zonep2vchk man page.

As an administrator, do the following:

1. Run the zonep2vchk tool with the -b option to perform a basic analysis that checks for Oracle Solaris features in use that might be impacted by a physical-to-virtual (P2V) migration.

source# zonep2vchk -b 11

2. Run the zonep2vchk tool with the -s option to perform a static analysis of application files. This inspects Executable and Linkable Format (ELF) binaries for system and library calls that might affect operation inside a zone.

source# zonep2vchk -s /opt/myapp/bin,/opt/myapp/lib

3. Run the zonep2vchk tool with the -r option to perform runtime checks that look for processes that could not be executed successfully inside a zone.

source# zonep2vchk -r 2h

This example is set to run for two hours, but that time can be reduced if needed.

4. Run the zonep2vchk tool with the -c option on the source system to generate a template zonecfg script named s11-zone.config.

source# zonep2vchk -c > /net/nfs_server/s11-zone.config

This template will contain resource limits and network configuration based on the physical resources and networking configuration of the source host.
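The four checks above can be collected into one pass so that each report lands in a predictable place. The following is a minimal sketch that only prints the commands it would run. The helper name p2v_scan_plan, the report file names, and the /net/nfs_server/p2v directory are assumptions made for illustration; the zonep2vchk options are the ones used in the steps above.

```shell
#!/bin/sh
# Hypothetical helper: print the four zonep2vchk invocations from this
# section, each redirected to a report file under an output directory.
# Nothing is executed; review the plan, then run the lines on the source.
p2v_scan_plan() {
    out_dir=$1      # assumed report directory, e.g. an NFS share
    app_paths=$2    # comma-separated paths for the static analysis (-s)
    runtime=$3      # duration for the runtime check (-r), e.g. 2h
    printf 'zonep2vchk -b 11 > %s/basic.txt\n' "$out_dir"
    printf 'zonep2vchk -s %s > %s/static.txt\n' "$app_paths" "$out_dir"
    printf 'zonep2vchk -r %s > %s/runtime.txt\n' "$runtime" "$out_dir"
    printf 'zonep2vchk -c > %s/s11-zone.config\n' "$out_dir"
}

# Example plan using the values from the steps above.
p2v_scan_plan /net/nfs_server/p2v /opt/myapp/bin,/opt/myapp/lib 2h
```

Printing the plan rather than executing it keeps the sketch safe to try anywhere; on a real source system the printed lines could be reviewed and then run as an administrator.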

Create an Archive of the System Image on a Network Device

The next task is to archive the file systems in the global zone. First, we need to verify that no non-global zones are installed on the source system. Depending on the type of zone brand, there are also limitations that are addressed below.
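One way to check for installed non-global zones is to filter the parseable output of zoneadm list. The sketch below assumes the colon-separated format printed by zoneadm list -cp, in which the second field is the zone name. The helper name non_global_zones and the sample input are illustrative only.

```shell
#!/bin/sh
# Print the name of every zone other than "global" from
# `zoneadm list -cp` style input (colon-separated, field 2 = zone name).
non_global_zones() {
    awk -F: '$2 != "" && $2 != "global" { print $2 }'
}

# On the source system this would be used as:
#   zoneadm list -cp | non_global_zones
# Illustrative input; only the second field matters to this helper.
printf '%s\n' \
    '0:global:running:/' \
    '-:web01:installed:/zones/web01' | non_global_zones
# prints: web01
```

If the helper prints nothing, only the global zone is present and the archive step can proceed.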

Multiple archive formats are supported for native zones, including cpio, pax archives created with the -x xustar (XUSTAR) format, and zfs. The examples in this section show two methods, zfs send and archiveadm create, for creating archives. The first example assumes the root pool is named rpool. For Oracle Solaris versions prior to Oracle Solaris 10, an example is provided of how to create Flash Archives using the flarcreate command.

Oracle Solaris Unified Archives are the recommended method to archive Oracle Solaris. However, if you are running a version of Oracle Solaris older than Oracle Solaris 11, an alternative method is provided below for archiving your system, because Unified Archives are not supported in earlier versions.

Also, as a side note, if you plan on using kernel zones, they accept only Unified Archives as an archive installation method.

As an administrator, use one of the following procedures, depending on your environment.

To archive Oracle Solaris 8 or Oracle Solaris 9:

Create an Oracle Solaris Flash Archive and either save or copy it to an NFS share:

source# flarcreate -n s8_host /net/nfs_server/s8_host.flar

To archive Oracle Solaris 10:

1. Create a snapshot of the entire root pool, named rpool@p2v in this procedure:

source# zfs snapshot -r rpool@p2v

2. Destroy the snapshots associated with swap and dump devices, because these snapshots are not needed on the target system:

source# zfs destroy rpool/swap@p2v

source# zfs destroy rpool/dump@p2v

3. Generate a ZFS replication stream archive that is compressed with gzip and stored on a remote NFS server:

source# zfs send -R rpool@p2v | gzip > /net/nfs_server/s10-zfs.gz

Alternatively, you can avoid saving intermediate snapshots, and thus reduce the size of the archive, by using the following command:

source# zfs send -rc rpool@p2v | gzip > /net/nfs_server/s10-zfs.gz
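Because the archive is written across the network, it can be worth checking the compressed file before relying on it. The following sketch uses gzip -t, which verifies only the compression layer and says nothing about the ZFS stream inside. The helper name verify_gzip_archive is an assumption, and a throwaway file stands in for the real archive.

```shell
#!/bin/sh
# Return 0 only if the file exists, is non-empty, and passes gzip's
# integrity test. This does not validate the ZFS stream itself.
verify_gzip_archive() {
    archive=$1
    if [ ! -s "$archive" ]; then
        echo "missing or empty: $archive" >&2
        return 1
    fi
    if ! gzip -t < "$archive" 2>/dev/null; then
        echo "corrupt gzip data: $archive" >&2
        return 1
    fi
    echo "ok: $archive"
}

# Self-contained demonstration with a stand-in file.
tmp=$(mktemp)
printf 'stand-in for a ZFS replication stream\n' | gzip > "$tmp"
verify_gzip_archive "$tmp"
rm -f "$tmp"
```

On the target system the same function could be pointed at the archive path on the NFS share before the zone is installed.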

Recommended method to archive Oracle Solaris 11 (including Kernel Zones):

Generate a Unified Archive (UAR) and store it on a remote NFS server:

source# archiveadm create -r -z s11-host /net/nfs_server/s11-host.uar
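The choice among the three procedures above depends only on the release of the source system. As a rough sketch, the release string printed by uname -r (5.8, 5.9, 5.10, or 5.11 on Oracle Solaris) can be mapped to the tool this article uses for it. The function name archive_method is hypothetical.

```shell
#!/bin/sh
# Map an Oracle Solaris release string, as printed by `uname -r`,
# to the archiving tool used in this article for that release.
archive_method() {
    case $1 in
        5.8|5.9) echo 'flarcreate' ;;
        5.10)    echo 'zfs send' ;;
        5.11)    echo 'archiveadm' ;;
        *)       echo 'unsupported'; return 1 ;;
    esac
}

# On the source system: archive_method "$(uname -r)"
archive_method 5.10
```

Keeping the mapping in one place makes it easier to drive the archive step from a script that runs across a mixed fleet of source systems.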

Configure the Zone on the Target System

The template zonecfg script generated by the zonep2vchk tool defines aspects of the source system's configuration that must be supported by the destination zone configuration. Additional target system–dependent information must be manually provided to fully define the zone.

If your source system is older than Oracle Solaris 10, which does not support the zonep2vchk tool, you can either create your own configuration script or manually configure the zone using the zonecfg command. See Step 4 below for a partial example of a zonecfg script or view the zonecfg man page for more details.

As an administrator, do the following:

1. Review the contents of the zonecfg script to become familiar with the source system's configuration parameters:

target# less /net/nfs_server/s11-zone.config

We will use the configuration file s11-zone.config, which was created earlier. The initial value of zonepath in this script is based on the host name of the source system. You can change the zonepath directory if the name of the destination zone is different from the host name of the source system.

Commented-out commands in the configuration file reflect parameters of the original physical system environment, including memory capacity, the number of CPUs, and network card MAC addresses. These lines may be uncommented for additional control of resources in the target zone.

2. Use the following commands in the global zone of the target system to view the current link configuration:

target# dladm show-link

LINK CLASS MTU STATE OVER

net3 phys 1500 up --

net2 phys 1500 up --

net1 phys 1500 up --

net0 phys 1500 up --

aggr1 aggr 1500 up net0 net2

aggr1_vnic1 vnic 1500 up aggr1

aggr1_vnic2 vnic 1500 up aggr1

target# dladm show-phys

LINK MEDIA STATE SPEED DUPLEX DEVICE

net3 Ethernet up 10000 full i40e3

net2 Ethernet up 10000 full i40e2

net1 Ethernet up 10000 full i40e1

net0 Ethernet up 10000 full i40e0

target# ipadm show-addr

ADDROBJ TYPE STATE ADDR

lo0/v4 static ok 127.0.0.1/8

lo0/v6 static ok ::1/128

3. Copy the zonecfg script to the target system:

target# cp /net/nfs_server/s11-zone.config .

4. Use a text editor such as vi to make any changes to the configuration file:

target# vi s11-zone.config

By default, the zonecfg script defines an exclusive-IP network configuration with an anet resource for every physical network interface that was configured on the source system. The target system automatically creates a VNIC for each anet resource when the zone boots. The use of VNICs makes it possible for multiple zones to share the same physical network interface. The lower-link name of an anet resource is initially set to change-me by the zonecfg command. You must manually set this field to the name of one of the data links on the target system. Any link that is valid for the lower link of a VNIC can be specified.

As you can see from the command output in Step 2, the target has an aggregate link of net0 and net2 called aggr1. We will set lower-link=aggr1 so that the zone VNIC is created on the proper link of the target machine. The zone configuration file also has a second anet called net6, which represents the link to Oracle Integrated Lights Out Manager (Oracle ILOM). This anet can be removed or altered; if it is removed, you will need to remove the corresponding ipadm interface once the zone is booted.

Additional zonecfg commands can be added to the s11-zone.config script to add resources or set other attributes, such as brand type.
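If the same edit has to be made for several zones, the lower-link change can be scripted instead of made by hand in vi. The sketch below assumes the generated script contains the literal text lower-link=change-me, as described above; the function name set_lower_link and the sample fragment are illustrative. It applies one link name to every anet in the file, so a configuration whose anets belong on different links still needs manual editing.

```shell
#!/bin/sh
# Print a zonecfg script with the change-me placeholder replaced.
# $1 = path to the generated script
# $2 = data link on the target system (for example, aggr1)
set_lower_link() {
    sed "s/lower-link=change-me/lower-link=$2/" "$1"
}

# Illustrative fragment standing in for the generated s11-zone.config.
cfg=$(mktemp)
cat > "$cfg" <<'EOF'
add anet
set linkname=net0
set lower-link=change-me
end
EOF

set_lower_link "$cfg" aggr1
rm -f "$cfg"
```

The function writes to standard output so that the original script stays untouched; redirect the output to a new file once the result looks right.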

As an example, instead of creating an Oracle Solaris Native Zone, you can create an Oracle Solaris Kernel Zone by commenting out the properties that the solaris-kz brand does not accept and adding or editing the remaining lines shown below:

# zonepath, ip-type, max-processes, and max-lwps can't be set with the solaris-kz brand

#set zonepath=/system/zones/%{zonename}

#set ip-type=exclusive

#set max-processes=20000

#set max-lwps=40000

set brand=solaris-kz

# solaris-kz needs capped-memory set

add capped-memory

set physical=4G

end

add anet

# anet linkname can't be set with solaris-kz

#set linkname=net0

set lower-link=aggr1

end

# solaris-kz needs a bootable device (see the note below about diskname)

add device

set match=/dev/rdsk/diskname

set bootpri=0

end

A kernel zone provides a full kernel and user environment within a zone, and it also increases kernel separation between the host system and the zone, whereas a native zone leverages the kernel of the global zone. Save this altered zonecfg file as s11-kernel-zone.config.

Note: The following requirements apply to the kernel zone's boot device:

- The full storage device path (for example, /dev/rdsk/c9t0d0) must be specified.

- The storage device must be defined by only one of the following:

+ The add device match resource property. If you specify a storage device for the add device match resource property, you must specify a device that is present in /dev/rdsk, /dev/zvol/rdsk, or /dev/did/rdsk.

+ A valid storage URI.

- The storage device must be a whole disk or LUN.

5. Use one of the following zonecfg commands to configure the zone.

To configure an Oracle Solaris 11 Native Zone:

target# zonecfg -z s11-zone -f s11-zone.config

To configure an Oracle Solaris 11 Kernel Zone:

target# zonecfg -z s11-kernel-zone -f s11-kernel-zone.config

6. Use the zonecfg command to view and verify the zone properties prior to installation.

If you need to change the zone configuration further, you can use the zonecfg command to edit the zone or just to verify the properties prior to installation. Below is an example of the kernel zone output.

target# zonecfg -z s11-kernel-zone info

zonename: s11-kernel-zone

brand: solaris-kz

autoboot: false

autoshutdown: shutdown

bootargs:

pool:

scheduling-class:

hostid: 0x1d848bd1

tenant:

anet:

lower-link: aggr1

allowed-address not specified

configure-allowed-address: true

defrouter not specified

allowed-dhcp-cids not specified

link-protection: mac-nospoof

mac-address: auto

mac-prefix not specified

mac-slot not specified

vlan-id not specified

priority not specified

rxrings not specified

txrings not specified

mtu not specified

maxbw not specified

bwshare not specified

rxfanout not specified

vsi-typeid not specified

vsi-vers not specified

vsi-mgrid not specified

etsbw-lcl not specified

cos not specified

pkey not specified

linkmode not specified

evs not specified

vport not specified

iov: off

lro: auto

id: 0

device:

match: /dev/rdsk/c0t600144F0A44E7D8600005990DA06002Bd0

storage not specified

id: 1

bootpri: 0

capped-memory:

physical: 4G

attr:

name: zonep2vchk-info

type: string

value: "p2v of host oos-s7-2l-04"

attr:

name: zonep2vchk-num-cpus

type: string

value: "original system had 128 cpus: consider capped-cpu (ncpus=128.0) or dedicated-cpu (ncpus=128)"

attr:

name: zonep2vchk-memory

type: string

value: "original system had 522496 MB RAM and 8191 MB swap: consider capped-memory (physical=522496M swap=530687M)"
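Before installing, it can help to confirm mechanically that no anet still carries the change-me placeholder. The following sketch reads zonecfg info output on standard input and looks only at lower-link lines. The function name check_lower_links and the sample input are assumptions for illustration.

```shell
#!/bin/sh
# Read `zonecfg -z <zone> info` output on stdin, print each lower-link
# value, and return non-zero if none is found or if any value is still
# the change-me placeholder.
check_lower_links() {
    awk '
        $1 == "lower-link:" {
            seen++
            if ($2 == "change-me") bad++
            print "lower-link -> " $2
        }
        END { exit (seen == 0 || bad > 0) ? 1 : 0 }
    '
}

# On the target system:
#   zonecfg -z s11-kernel-zone info | check_lower_links
# Illustrative input taken from the output above.
printf '%s\n' 'brand: solaris-kz' 'anet:' '  lower-link: aggr1' | check_lower_links
```

A non-zero exit status from the function is a signal to go back to the configuration script before running the install.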

Install the Zone on the Target System

This example does not alter the original system configuration during the installation.

As an administrator, do the following.

1. Install the zone using the archive created on the source system.

To install an Oracle Solaris Native Zone:

- Using an Oracle Solaris 8 Flash Archive and without preserving the system configuration:

target# zoneadm -z s8-zone install -u -a /net/nfs_server/s8_host.flar

- Using an Oracle Solaris 10 ZFS streaming archive and preserving the system configuration:

target# zoneadm -z s10-zone install -p -a /net/nfs_server/s10-zfs.gz

- Using an Oracle Solaris 11 Unified Archive (the configuration is preserved by default):

target# zoneadm -z s11-zone install -a /net/nfs_server/s11-host.uar

Note: To retain the sysidcfg identity from a system image that you created without altering the image, use the -p option after the install subcommand. To remove the sysidcfg identity from a system image that you created without altering the image, use the -u option. The system unconfiguration occurs in the target zone. For more information, see the zoneadm man page.

To install an Oracle Solaris 11 Kernel Zone:

target# zoneadm -z s11-kernel-zone install -a /net/nfs_server/s11-host.uar

2. Boot and log in to the newly created zone, for example:

target# zoneadm -z s11-zone boot

target# zlogin -C s11-zone
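The install variants above differ only in whether -p or -u is passed, and Unified Archives need neither. As a minimal sketch, a helper can assemble the matching zoneadm command line from the archive name. The function name zone_install_cmd and its "preserve" and "unconfigure" keywords are hypothetical; the zoneadm options are the ones shown above. The helper prints the command instead of running it.

```shell
#!/bin/sh
# Print the zoneadm install command for a zone and an archive.
# $1 = zone name, $2 = archive path,
# $3 = "preserve" (default) or "unconfigure"; ignored for *.uar files,
#      whose configuration is preserved by default.
zone_install_cmd() {
    zone=$1
    archive=$2
    identity=${3:-preserve}
    case $archive in
        *.uar) flag= ;;
        *)
            case $identity in
                preserve)    flag=-p ;;
                unconfigure) flag=-u ;;
                *) echo "unknown identity mode: $identity" >&2; return 1 ;;
            esac
            ;;
    esac
    echo "zoneadm -z $zone install ${flag:+$flag }-a $archive"
}

# The three native-zone cases from this section.
zone_install_cmd s8-zone /net/nfs_server/s8_host.flar unconfigure
zone_install_cmd s10-zone /net/nfs_server/s10-zfs.gz preserve
zone_install_cmd s11-zone /net/nfs_server/s11-host.uar
```

Printing the command first leaves room to review it, which is useful because an install against the wrong archive takes a long time to notice and undo.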

Conclusion

As you can see from the example above, the process of moving your Oracle Solaris system into a non-global zone is quite straightforward. Depending on your application and the corresponding output of zonep2vchk, there might be additional post-configuration steps; however, that is heavily dependent on what is running on your system. By using Oracle's virtualization technologies, especially Oracle Solaris Zones, you get higher server utilization, attain better application isolation and security, and achieve a more efficient and clean system architecture.

About the Author

Kevin Klapak is a technical product manager on the Oracle Optimized Solutions team. He has a computer science background, and since joining Oracle, he has been working on projects related to data migration, systems security, and cloud computing.