All,

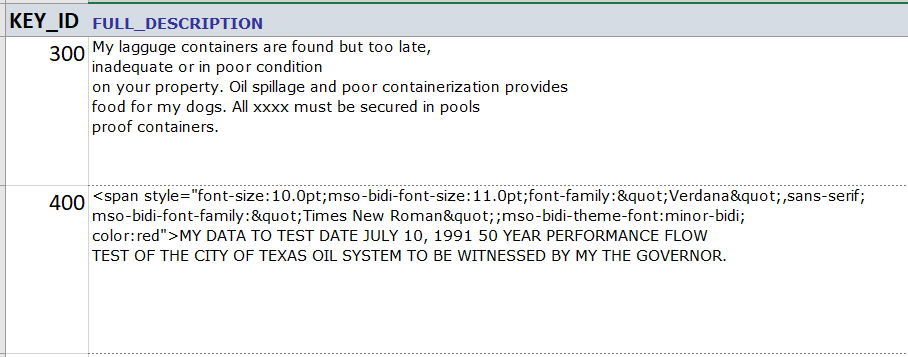

iam having problem loading .csv file via my external table sample below - the problem is actually full_description column which contains all sorts of characters (",$<>!@%^&* etc) including non displayable values set:

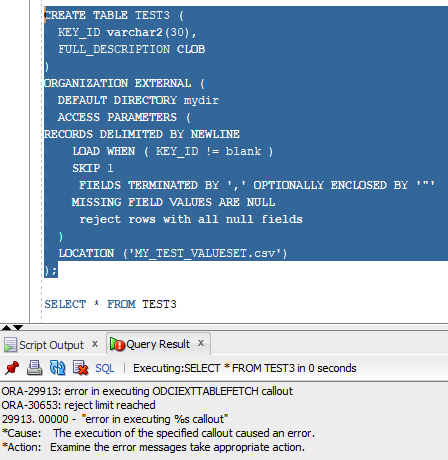

PART 1:

CREATE TABLE TEST3 (

KEY_ID varchar2(30),

FULL_DESCRIPTION CLOB

)

ORGANIZATION EXTERNAL (

DEFAULT DIRECTORY mydir

ACCESS PARAMETERS (

RECORDS DELIMITED BY NEWLINE

LOAD WHEN ( KEY_ID != blank )

SKIP 1

FIELDS TERMINATED BY ',' OPTIONALLY ENCLOSED BY '"'

MISSING FIELD VALUES ARE NULL

reject rows with all null fields

)

LOCATION ('MY_TEST_VALUESET.csv')

);

PART 2:

PART 3 - data set:

How do i process these characters ?

Iam just surprised some of these api like SQLLDR have been used for many year but still have critical weakness like processing Clob/large data field, external table cannot process CLOB yet that's Oracle supported data type.

using Oracle 12.2g Enterprise.

thanks in advance.