Hi OGG experts,

I am designing and implementing OGG(Version 12.2.0.2.2 OGGCORE_12.2.0.2.0_PLATFORMS_170630.0419_FBO)to capture ddl data ( include alter、drop、rename operations) from oracle 11g, and send to kafka by oracle bigdata , but ogg cannot obtain ddl statement only have table schema , therefore I create a trigger to get ddl statement, and insert to a new table, and table structure as below:

CREATE TABLE ddl_change_log (

id varchar2(50) default SYS_GUID() not null primary key,

db_name VARCHAR2(100),

table_name VARCHAR2(100),

ddl_sql VARCHAR2(4000),

ddl_timestamp TIMESTAMP

);

trigger definition:

CREATE OR REPLACE TRIGGER ddl_trigger

AFTER ALTER OR RENAME OR COMMENT ON SCHEMA

DECLARE

v_db_name VARCHAR2(100) := SYS_CONTEXT('USERENV', 'CURRENT_SCHEMA');

v_table_name VARCHAR2(100);

v_ddl_sql VARCHAR2(1024);

v_sql_out ORA_NAME_LIST_T;

BEGIN

v_table_name := ORA_DICT_OBJ_NAME;

FOR i IN 1..ORA_SQL_TXT(v_sql_out) LOOP

v_ddl_sql := v_ddl_sql || v_sql_out(i);

END LOOP;

INSERT INTO ddl_change_log(db_name, table_name, ddl_sql, ddl_timestamp)

VALUES(v_db_name, v_table_name, v_ddl_sql, SYSTIMESTAMP);

END;

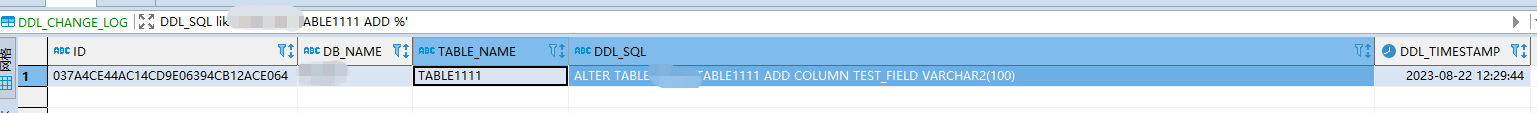

and now, I try to add column that named test_field and data type was varchar2(100) to another table, the ddl_change_log table insert a row data correctly:

kafka consumer had nothing, but I insert a row data into

kafka consumer had nothing, but I insert a row data into ddl_change_log without trigger, I found kafka consumer had received a message like this:

{"table":"xxx.DDL_CHANGE_LOG","op_type":"I","op_ts":"2023-08-22 15:49:23.993438","current_ts":"2023-08-22T15:49:30.722000","pos":"00000000210000425372","primary_keys":["ID"],"after":{"ID":"037A4CE44AC24CD9E06394CB12ACE064","DB_NAME":"xxx","TABLE_NAME":"TABLE1111","DDL_SQL":"ALTER TABLE xxx.TABLE1111 ADD COLUMN TEST_FIELD_COPY VARCHAR2(100)","DDL_TIMESTAMP":"2023-08-22 12:29:44.321805000"}}

Obviously, It works, I have no idea the difference between sql insert and insert data in trigger.

Thanks a lot for any help you can provide.