I have a an external table in which I used dbms_cloud.create_external table which sources from a huge file from a national insurance provider (meaning I cannot adjust/manipulate the underlying file).

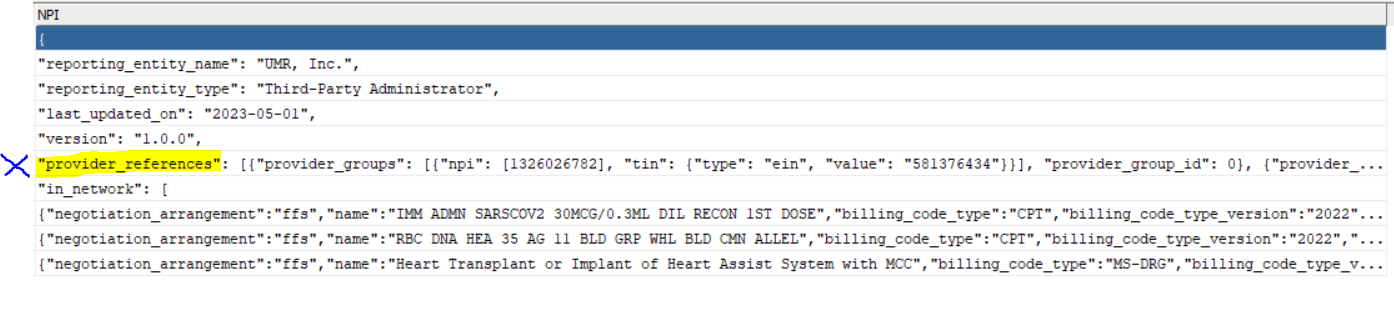

My table is just one CLOB and over 70,000,000 characters for the column. I am trying to filter to the record shown below that starts with provider_references.

I tried using this:

Select

NPI

FROM PT_JSON3

WHERE

dbms_lob.substr(NPI,21,1) = '"provider_references"'

However, I had this running for 3 days and it never finished because the CLOB is so long.

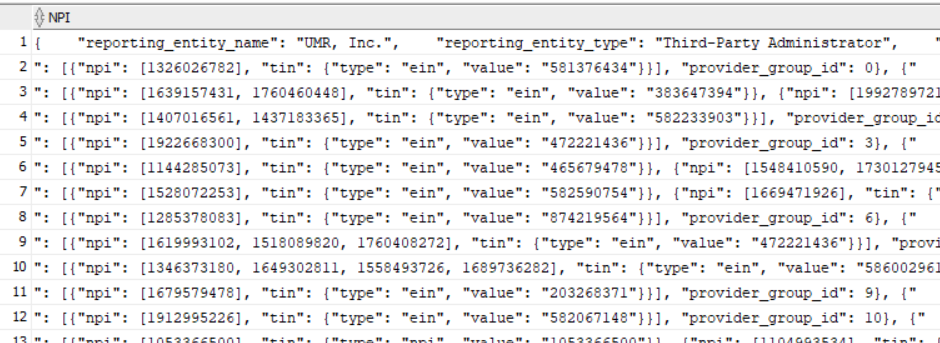

I figure this is always going to be a problem because of the data source, so I tried a new method with another external table. I parsed by provider_groups which makes up the delimiter for that specific record. The result is this:

I can work with the data in this format, but there is one big issue. The rest of the records in that original file are lumped as one giant text at the end of this table resulting in a buffer size issue.

When I run (where clause to filter to second record in table):

SELECT NPI FROM v_pt_provider

WHERE NPI = '": [{"npi": [1326026782], "tin": {"type": "ein", "value": "581376434"}}], "provider_group_id": 0}, {"'

I get this error:

ORA-29913: error in executing ODCIEXTTABLEFETCH callout

ORA-29400: data cartridge error

KUP-04020: found record longer than buffer size supported, 200003584, in https://objectstorage.us-ashburn-1.oraclecloud.com/n/idfb1vorcnhr/b/RGObjectStorage/o/large.json (offset=68183326)

which makes sense because of enormous data dump in the rest of the records being lumped together.

I tried inserting data into a new table using this:

create table pt_provider

As

SELECT

NPI

FROM V_PT_PROVIDER

WHERE length(NPI) < 20001

but I got the same buffer size error.

Is there a way to insert the records into another table while ignoring the invalid record at the end that causes the buffer size issue? I was hoping the where clause with length less than 20001 would handle that, but it does not.

Any ideas?